One of the challenges with implementing VXLAN is configuring Multicast on physical switches to support BUM (broadcast, unknown unicast and multicast) traffic. With the release of NSX for vSphere, VXLAN can be deployed to work in Unicast mode with the help of the NSX controller. In this article we will look at deploying VXLAN with NSX and integrating vCloud Director to create logical networks.

This article assumes that vCloud Director is already installed. vCloud Director should be version 5.5.0 or higher.

Deploying NSX Manager

First, download the NSX Manager and deploy the OVF image on the Management Cluster. The Deploy OVF wizard will require IP address details for the NSX Manager. Once the NSX Manager is deployed, connect to its web UI to register it with vCenter Server and the vCenter Lookup Service. Once NSX Manager is successfully registered with vCenter, the Networking & Security tab is displayed in the Web Client. All NSX configuration will be done using the Web Client. Under the Networking & Security plugin, the NSX Manager should be listed.

Note: An important caveat here is to use a user account with administrator privileges to register vCenter, otherwise the NSX Manager will not be displayed in the Web Client.

Deploying the NSX Controller

The next step is to deploy the NSX controller. Under the NSX Controller Nodes section, click the ‘+’ to add the first NSX controller. The NSX controller provides a control plane that distributes network information down to the ESX hosts. The controller can be clustered by deploying additional controllers to support a scale-out architecture and high availability.

Prepare ESX hosts for VXLAN

To start deploying logical networks, the ESX hosts need to be prepared for VXLAN. Under Installation->Host Preparation, click Install against the cluster that will be prepared. The installation process pushes the VXLAN VIBs to the ESX hosts and enables the Distributed Firewall.

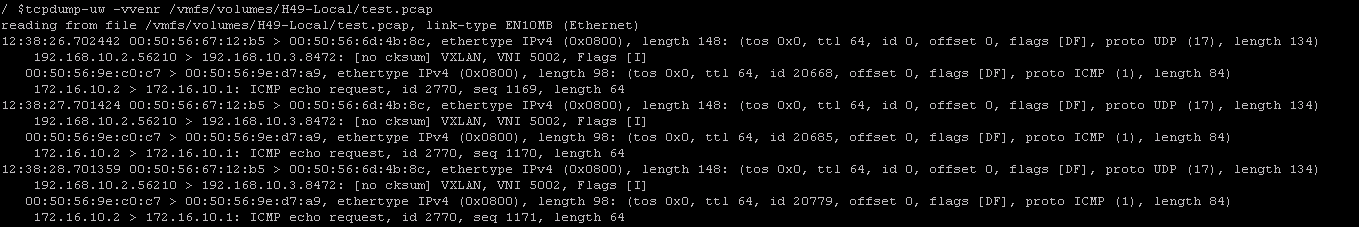

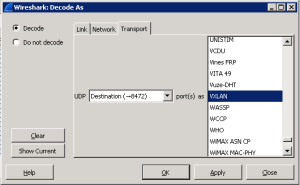

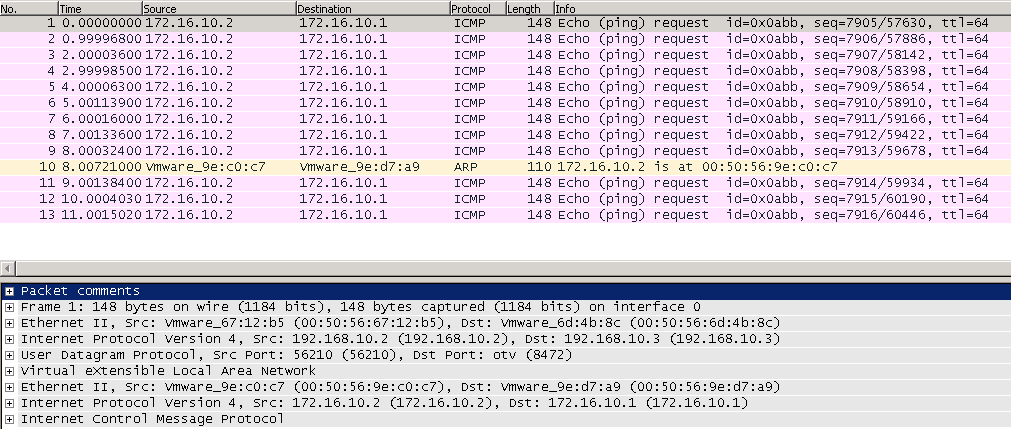

Once the ESX hosts go into ‘Ready’ status, we can configure VXLAN. Select the Virtual Distributed Switch and, if VXLAN traffic needs to be isolated in a VLAN, enter the VLAN number. The default MTU of 1600 should suffice. Either an IP pool or DHCP can be used to assign IP addresses to the VTEP interface that gets created as part of the VXLAN configuration. Finally, select the teaming policy for VTEP load balancing and high availability.
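To see why an MTU of 1600 is comfortable, it helps to add up the encapsulation headers. The sketch below is only an illustration: the function name and parameters are made up for this example, and it assumes an IPv4 outer header as described in the VXLAN specification (RFC 7348).

```python
# Header sizes in bytes, per the VXLAN encapsulation format (RFC 7348).
INNER_ETHERNET = 14   # Ethernet header of the guest frame being carried
DOT1Q_TAG = 4         # optional 802.1Q tag on the guest frame
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20       # assumption: the VTEPs talk to each other over IPv4


def required_transport_mtu(guest_mtu=1500, inner_vlan_tag=False):
    """Return the smallest transport MTU that carries a full guest frame.

    Hypothetical helper for illustration only, not part of any NSX tooling.
    """
    inner_frame = guest_mtu + INNER_ETHERNET
    if inner_vlan_tag:
        inner_frame += DOT1Q_TAG
    return inner_frame + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


if __name__ == "__main__":
    for tagged in (False, True):
        needed = required_transport_mtu(1500, inner_vlan_tag=tagged)
        print(f"inner VLAN tag={tagged}: need MTU >= {needed}, 1600 leaves {1600 - needed} spare")
```

A standard 1500-byte guest MTU therefore needs roughly 1550 bytes on the transport network, which is why 1600 leaves some headroom. The physical switches carrying VTEP traffic need to allow the same frame size.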

Logical Network Preparation

Once the ESX hosts are prepared and ready, the transport zone and Segment ID pool need to be created. Under Logical Network Preparation->Segment ID, enter the Segment ID pool. We do not need Multicast IP addresses since we will use Unicast as the Control Plane mode.
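Before typing a pool into the Segment ID field it can be worth sanity-checking the range. The following is a minimal sketch, not NSX code: the helper name is hypothetical, the upper bound comes from the 24-bit VXLAN network identifier, and the lower bound of 5000 is an assumption based on the range NSX for vSphere is commonly documented to accept.

```python
MAX_VNI = 2**24 - 1     # VXLAN network identifiers are 24 bits wide
ASSUMED_MIN_ID = 5000   # assumption: NSX for vSphere starts segment IDs at 5000


def parse_segment_pool(text, min_id=ASSUMED_MIN_ID, max_id=MAX_VNI):
    """Parse a pool such as '5000-5999' and return it as a range of IDs.

    Hypothetical helper for illustration; raises ValueError on a bad pool.
    """
    start_text, dash, end_text = text.strip().partition("-")
    if not dash:
        raise ValueError("expected a range in the form START-END")
    start, end = int(start_text), int(end_text)
    if not (min_id <= start <= end <= max_id):
        raise ValueError(f"pool must lie within {min_id}-{max_id}")
    return range(start, end + 1)


if __name__ == "__main__":
    pool = parse_segment_pool("5000-5999")
    print(f"{len(pool)} logical networks can be created from this pool")
```

Each segment ID backs one logical network, so the size of the pool caps how many VXLAN networks vCloud Director will be able to create.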

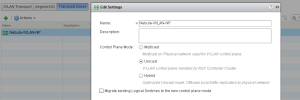

Typically a transport zone and its Control Plane mode are also defined at this stage, but since we are integrating vCloud Director we will allow vCloud Director to create the transport zone.

vCloud Director Configuration

At this stage there should be a VXLAN network pool created by default in vCloud Director. It will be in an error state since VXLAN was not pre-configured. Right-click the network pool and choose Repair to recreate the transport zone. Once complete, there should be a transport zone listed under the Logical Network Preparation tab. Edit the transport zone and change the Control Plane mode to Unicast.
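If you prefer to confirm the Control Plane mode without clicking through the Web Client, the transport zone list can also be inspected as XML. The sketch below only parses a hand-written sample; the element names (vdnScope, name, controlPlaneMode) and the mode values are assumptions modelled on what the NSX for vSphere REST API is understood to return for transport zones, so check them against the API guide for your version. The zone name is made up.

```python
import xml.etree.ElementTree as ET

# Hand-written sample, NOT captured from a real NSX Manager.
# Element names and values are assumptions; verify them for your NSX version.
SAMPLE_RESPONSE = """
<vdnScopes>
  <vdnScope>
    <objectId>vdnscope-1</objectId>
    <name>vcd-tz-hypothetical</name>
    <controlPlaneMode>MULTICAST_MODE</controlPlaneMode>
  </vdnScope>
</vdnScopes>
"""


def zones_not_in_mode(xml_text, wanted="UNICAST_MODE"):
    """Return (name, mode) pairs for transport zones not using the wanted mode."""
    root = ET.fromstring(xml_text)
    mismatches = []
    for scope in root.iter("vdnScope"):
        name = scope.findtext("name", default="(unnamed)")
        mode = scope.findtext("controlPlaneMode", default="UNKNOWN")
        if mode != wanted:
            mismatches.append((name, mode))
    return mismatches


if __name__ == "__main__":
    for name, mode in zones_not_in_mode(SAMPLE_RESPONSE):
        print(f"{name} is still in {mode}; edit it and switch to Unicast")
```

An empty result would mean every transport zone in the response is already in Unicast mode.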

Consuming Logical Networks

The network pool that was created can now be assigned to a vCloud Virtual Data Center. To start consuming VXLAN logical networks, an Edge Gateway and a Routed network need to be deployed within the organization. When the Routed network is created, a logical switch with a segment ID is created as a port group in vCenter. Virtual machines can now be deployed and connected to the Routed network, using VXLAN as the underlying transport.

I hope you found this article useful. Questions or comments are welcome!